Scrapy Framework (Part 3)

CrawlSpider

Creating a CrawlSpider spider file

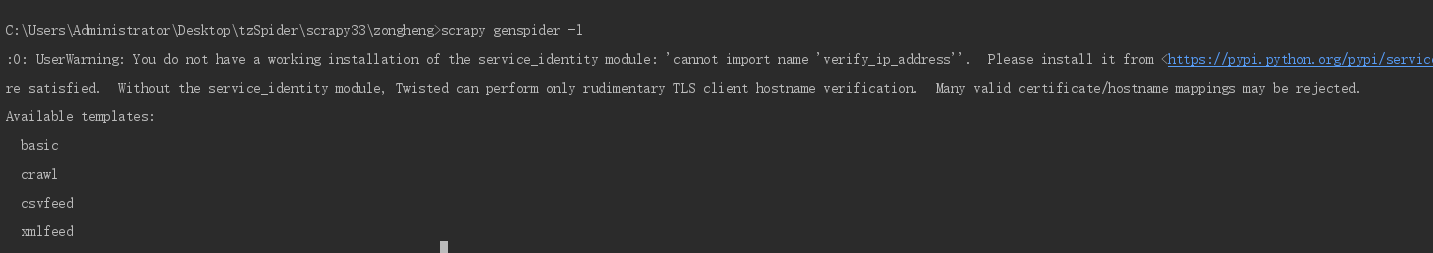

List: shows all available templates.

Command: -t selects the template to use, followed by the spider name and the domain.

scrapy genspider -t crawl zongheng xxx.com

Rule

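Purpose: Rule defines the crawling rules of a CrawlSpider.

Parameters:

    def __init__(self, link_extractor=None, callback=None, cb_kwargs=None, follow=None,
                 process_links=None, process_request=None, errback=None):

link_extractor: a Link Extractor object that defines how links are extracted from each crawled page.

callback: the callback function that handles the responses for the links extracted by link_extractor.

cb_kwargs: a dict of keyword arguments to pass to the callback function (cb stands for callback).

follow: specifies whether links should be followed from each response obtained through this rule. It takes True or False: with follow=True, the responses produced by link_extractor are passed back through the rules; with False they are not. If left as None, it defaults to True when no callback is given and to False otherwise.

process_links: a callback for filtering links; it processes the links extracted by link_extractor.

process_request: a callback for filtering requests.

errback: a function that handles exceptions.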

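LinkExtractor

LinkExtractor is also a class defined by the Scrapy framework.

Its sole purpose is to extract, from web pages, the links that will eventually be followed.

We can also define our own link extractor: it only needs to provide a method named extract_links, which receives a Response object and returns a list of scrapy.link.Link objects.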

    def __init__(self, allow=(), deny=(), allow_domains=(), deny_domains=(), restrict_xpaths=(),
                 tags=('a', 'area'), attrs=('href',), canonicalize=False, unique=True,
                 process_value=None, deny_extensions=None, restrict_css=(), strip=True,
                 restrict_text=None):

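allow: a regular expression, or a list of them, that URLs must match to be extracted. If empty, all URLs match.

deny: a regular expression, or a list of them, that excludes matching URLs. If empty, no URL is excluded.

allow_domains: a str, or a list of them, naming the domains from which links are extracted.

deny_domains: a str, or a list of them, naming the domains from which links are not extracted.

restrict_xpaths (restrict the matching region by XPath): an XPath expression or a list of them.

restrict_css (restrict the matching region by CSS): a CSS selector.

restrict_text (restrict matches by link text): a regular expression.

tags=('a', 'area'): the tags considered when extracting links.

attrs=('href',): the attributes considered when extracting links.

canonicalize: whether to canonicalize each extracted URL.

unique: whether to filter out duplicates among the matched links.

process_value: a function that receives each value extracted from the tags.

deny_extensions: file extensions to ignore when extracting links, such as .jpg or .xxx.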

Case study: crawling Zongheng novels

1. Requirements analysis

Page analysis

- Level-2 page: novel details

[Figure: novel detail page]

- Level-3 page: chapter catalog

[Figure: chapter catalog page]

- Level-4 page: chapter content

[Figure: chapter content page]

Overall page structure

Required fields

- Novel information

[Figure: database fields for novel information]

- Chapter information

[Figure: database fields for chapter information]

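2. Implementation

Crawling logic

[Figure: CrawlSpider flow]

Spider file

Based on the target data (the data to be stored), define Rule entries in rules, configure callback functions as needed, and parse each response to obtain the desired data.

- The parse_book function extracts the novel information

- The parse_catalog function extracts the chapter information

- The get_content function extracts the chapter content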

    # -*- coding: utf-8 -*-

Items file

The fields in the items file are defined according to the target data requirements.

    import scrapy

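Pipelines file

Here the data is written to the database:

- First write the novel information

- Write the chapter information, excluding the chapter content

- Write the chapter content, matched by chapter URL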

    # -*- coding: utf-8 -*-
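The three steps above can be sketched as follows. This is only an illustration under assumptions: it uses the standard-library sqlite3 module in place of whatever database the project actually uses, and the class name, table layout, db_path parameter, and the kind key on each item are all hypothetical.

```python
import sqlite3


class NovelSqlitePipeline:
    """Sketch of a pipeline storing novels and chapters in SQLite (an assumption)."""

    def __init__(self, db_path=":memory:"):
        # db_path is a hypothetical parameter; ":memory:" keeps the demo self-contained.
        self.db_path = db_path
        self.conn = None

    def open_spider(self, spider):
        self.conn = sqlite3.connect(self.db_path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS novel (url TEXT PRIMARY KEY, title TEXT)"
        )
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS chapter "
            "(url TEXT PRIMARY KEY, catalog_url TEXT, title TEXT, content TEXT)"
        )

    def close_spider(self, spider):
        self.conn.commit()
        self.conn.close()

    def process_item(self, item, spider):
        kind = item.get("kind")
        if kind == "novel":
            # Step 1: novel information
            self.conn.execute(
                "INSERT OR IGNORE INTO novel (url, title) VALUES (?, ?)",
                (item["url"], item["title"]),
            )
        elif kind == "chapter":
            # Step 2: chapter information without the content
            self.conn.execute(
                "INSERT OR IGNORE INTO chapter (url, catalog_url, title) VALUES (?, ?, ?)",
                (item["url"], item["catalog_url"], item["title"]),
            )
        elif kind == "content":
            # Step 3: attach the content to the chapter row with the same URL
            self.conn.execute(
                "UPDATE chapter SET content = ? WHERE url = ?",
                (item["content"], item["url"]),
            )
        self.conn.commit()
        return item


# Drive the pipeline by hand with made-up items.
pipeline = NovelSqlitePipeline()
pipeline.open_spider(spider=None)
for demo_item in [
    {"kind": "novel", "url": "/book/1", "title": "Demo novel"},
    {"kind": "chapter", "url": "/chapter/1/1", "catalog_url": "/catalog/1", "title": "Chapter 1"},
    {"kind": "content", "url": "/chapter/1/1", "content": "Once upon a time"},
]:
    pipeline.process_item(demo_item, spider=None)
row = pipeline.conn.execute("SELECT title, content FROM chapter").fetchone()
pipeline.close_spider(spider=None)
```

The sketch assumes a chapter row already exists when its content arrives; if the content item comes first, the UPDATE matches nothing. With Item classes, an isinstance check would replace the kind key.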

Settings file

- Set the robots protocol option, add global request headers, and enable the pipeline

- Enable the download delay

- Configure the database

    # -*- coding: utf-8 -*-
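A settings fragment covering the points above might look like the sketch below. ROBOTSTXT_OBEY, DEFAULT_REQUEST_HEADERS, ITEM_PIPELINES and DOWNLOAD_DELAY are built-in Scrapy settings; the concrete values, the pipeline path, and the DATABASE_CONFIG name are assumptions for illustration.

```python
# settings.py (fragment); values and custom names are illustrative assumptions.

# Whether to obey robots.txt; this sketch assumes it is turned off.
ROBOTSTXT_OBEY = False

# Global request headers sent with every request.
DEFAULT_REQUEST_HEADERS = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en",
    "User-Agent": "Mozilla/5.0 (compatible; example-bot)",
}

# Enable the item pipeline; the dotted path is hypothetical.
ITEM_PIPELINES = {
    "myproject.pipelines.NovelPipeline": 300,
}

# Delay between consecutive requests to the same site, in seconds.
DOWNLOAD_DELAY = 1

# Custom, project-specific database settings (not a built-in Scrapy setting).
DATABASE_CONFIG = {
    "host": "127.0.0.1",
    "port": 3306,
    "user": "root",
    "password": "change-me",
    "database": "novels",
}
```

A pipeline can read the custom entry through the crawler settings object, for example in a from_crawler class method.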

CrawlSpider deduplication

Here each extracted link is checked against a condition, and duplicates are removed with the set seen.

    def _requests_to_follow(self, response):
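The idea behind the seen set can be shown with a simplified, standalone model. This is not Scrapy's actual source: the function name, the lambda "rules", and the sample links are made up, and plain strings stand in for Link objects.

```python
def requests_to_follow(extractors, page_links):
    """Yield (rule_index, link) pairs, skipping links an earlier rule already produced."""
    seen = set()  # created fresh for every response
    for rule_index, extract in enumerate(extractors):
        # Keep only links that no earlier rule has claimed.
        links = [link for link in extract(page_links) if link not in seen]
        for link in links:
            seen.add(link)
            yield rule_index, link


# Two hypothetical "rules" whose matches overlap on /book/1.
extractors = [
    lambda links: [link for link in links if "/book/" in link],
    lambda links: [link for link in links if link.endswith("1")],
]
page_links = ["/book/1", "/book/2", "/chapter/1"]
result = list(requests_to_follow(extractors, page_links))
```

Because the set is rebuilt for each response, it only prevents the same link from being scheduled by several rules on one page. Filtering of repeated requests across pages is left to the scheduler's duplicate filter.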